The explosion of generative AI has given marketing teams unprecedented power to clean up, perfect, and even reinvent visual assets. For agencies managing client social media accounts, this creative tool presents a high-stakes challenge: When does an “enhancement” become a “misrepresentation”?

The answer, according to platforms like Meta and Google and to advertising standards bodies, lies in one critical concept, referred to here as the Materiality Rule.

If an AI-driven change materially alters what a consumer understands about the product, service, or environment, transparency is not optional—it is mandatory for compliance, especially in advertising.

Much of the guidance from Google and Meta is focused on the US market. Within the UK, the Advertising Standards Authority (ASA) is the regulator for all advertising. Whilst its advertising Codes (the CAP and BCAP Codes) do not contain AI-specific rules, their rules and principles apply however the content is generated or targeted.

There is no legal requirement in the UK to disclose the use of AI in advertisements. However, in addition to complying with UK regulations, any organisation using platforms such as Google or Meta must adhere to their respective terms of service. This article primarily discusses the use of AI-generated assets in paid advertising and social media platforms.

When working with a social media or Google Ads PPC agency, it is advisable to exercise caution regarding any offer to create video and images using AI.

1. The Acceptable Zone: Transient Clean-up

Not all AI edits require a warning label. Using AI tools to remove temporary artifacts is permissible without mandatory disclosure, much like traditional photo retouching.

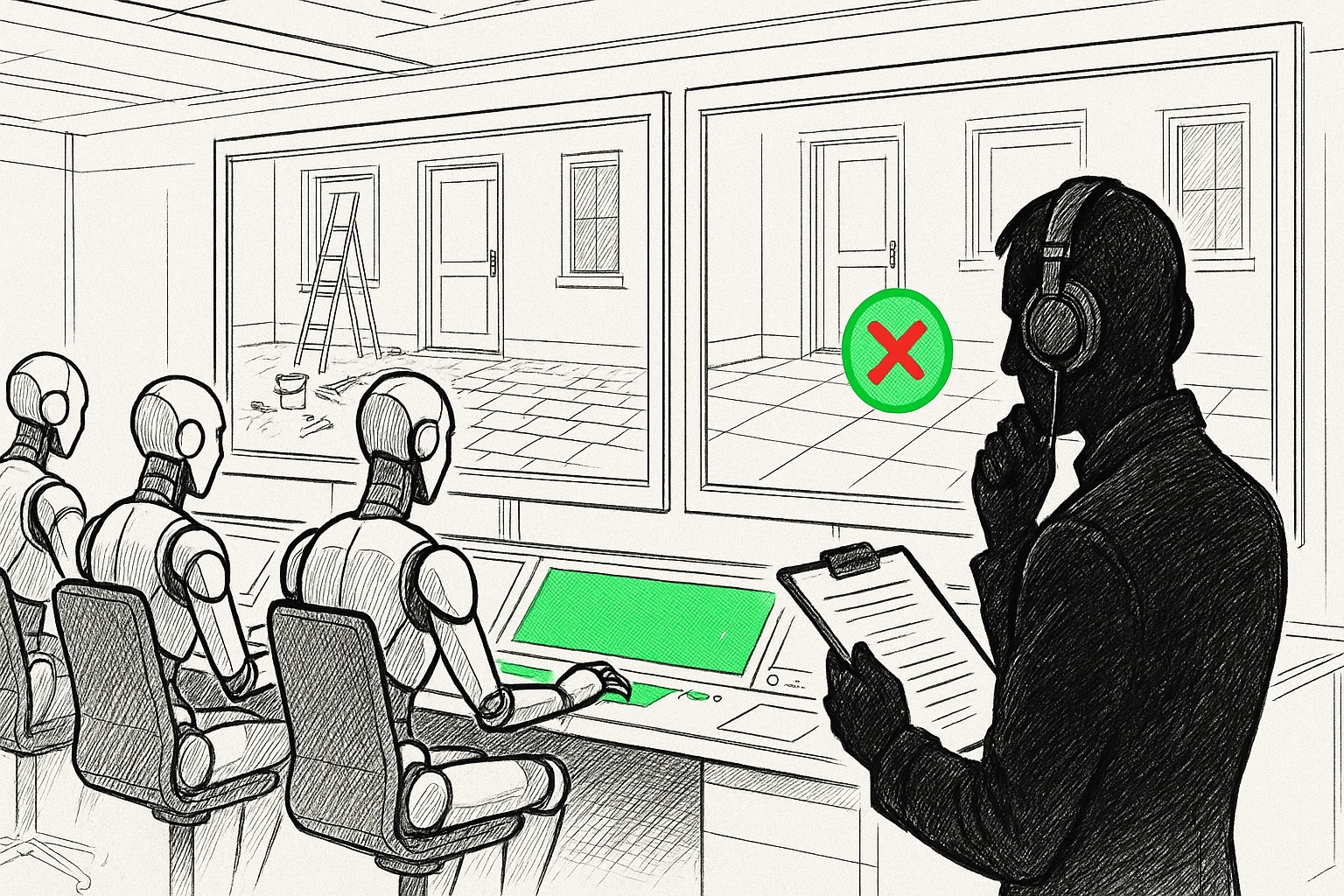

Example: Removing a Ladder or Debris

If your home improvement client has installed a set of patio doors, and a ladder or tools were left leaning against the wall, using AI to digitally remove them is considered an acceptable, minor edit.

Why it’s safe: This change is classified as a minor visual enhancement or “productivity edit” and does not fundamentally alter the finished product, its quality, or the final scope of the service provided [https://blog.youtube/news-and-events/disclosing-ai-generated-content/]. You are simply removing elements that were transient and irrelevant to the final outcome.

2. The Danger Zone: Material Misrepresentation

The risk skyrockets when AI is used to alter a permanent feature or a fundamental element of the scene. This is where an edit becomes a misrepresentation, particularly in paid advertisements.

Example: Replacing a Dirty Patio with a Clean One

If the client’s scope of work was installing a custom railing but did not include cleaning, replacing, or improving the surrounding patio, using AI to swap a dirty patio for a pristine one crosses the compliance line.

Why it’s high-risk: This is a material alteration because it falsely depicts the environment and the aesthetic outcome delivered by the client. Platforms require disclosure when content “Alters footage of a real event or place” or “Generates a realistic-looking scene that didn’t actually occur”.

In the context of advertising, undisclosed material changes violate core integrity principles prohibiting deceptive practices. For paid campaigns, this high risk of misleading consumers can result in severe penalties, including ad rejection or permanent account suspension on platforms like Google Ads.

3. The Deepfake Liability: Synthetic People

The highest level of risk involves the creation of realistic human likenesses. Introducing AI-generated figures who are speaking or moving around the scene triggers the most stringent disclosure policies, similar to those for deepfakes.

Example: An AI Spokesperson Delivering a Testimonial

If you use a realistic, moving, or speaking synthetic figure to offer a testimonial or suggest client satisfaction with the home improvement job, this can be classified as a fraudulent endorsement.

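Why disclosure is mandatory: Platforms generally require disclosure if the content “Makes a real person appear to say or do something they didn’t do”. A realistic synthetic figure that never existed falls under the related requirement to disclose a realistic-looking scene that didn’t actually occur. If the figure appears realistic, the lack of disclosure facilitates a misleading claim of authenticity.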

The Compliance Strategy: To minimize risk, you must do one of the following:

- Use only clearly stylized or unrealistic figures (e.g., cartoons or fantastical animations).

- If realism is required, implement multi-layered disclosure (platform native label plus a clear caption overlay).

The Core Takeaway: The Two-Part Transparency Mandate

For agencies to safely navigate this landscape, transparency must be viewed as a mandatory operational standard. We cannot rely solely on platform detection systems; internal governance is essential.

Our action items for any AI-enhanced client asset:

- Apply the Materiality Rule: If the AI enhancement fixes, masks, or exaggerates the physical condition, quality, or environment of the advertised service, explicit disclosure is required.

- Layer the Disclosure: For content that meets the Materiality Rule, use both the native platform disclosure tools (e.g., Meta’s “AI info” or YouTube’s “altered content” setting) and a clear textual disclaimer in the caption (e.g., “Patio digitally enhanced for visual purposes”).
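For teams that want to build these action items into an internal review checklist, the decision logic could be sketched roughly as follows. This is a minimal illustration, not an official platform rule set: the `AiEdit` fields, the `Zone` names, and the disclosure strings are all hypothetical labels invented here to mirror the three zones described in this article.

```python
from dataclasses import dataclass
from enum import Enum


class Zone(Enum):
    # Hypothetical labels mirroring the three zones in this article.
    TRANSIENT_CLEANUP = "transient clean-up"
    MATERIAL_ALTERATION = "material alteration"
    SYNTHETIC_PERSON = "realistic synthetic person"


@dataclass(frozen=True)
class AiEdit:
    """Hypothetical record of one AI edit applied to a client asset."""
    description: str
    # True if the edit fixes, masks, or exaggerates the physical condition,
    # quality, or environment of the advertised service.
    changes_condition_quality_or_environment: bool
    # True if the edit adds a realistic, moving, or speaking synthetic figure.
    adds_realistic_person: bool


def classify(edit: AiEdit) -> Zone:
    """Place an edit in the highest-risk zone it qualifies for."""
    if edit.adds_realistic_person:
        return Zone.SYNTHETIC_PERSON
    if edit.changes_condition_quality_or_environment:
        return Zone.MATERIAL_ALTERATION
    return Zone.TRANSIENT_CLEANUP


def required_disclosures(edit: AiEdit) -> list[str]:
    """Return the disclosure layers this article recommends for the edit."""
    if classify(edit) is Zone.TRANSIENT_CLEANUP:
        return []  # minor retouching: no mandatory disclosure
    # Material edits and synthetic people both get layered disclosure.
    return ["platform native label", "caption disclaimer"]


if __name__ == "__main__":
    examples = [
        AiEdit("Remove ladder left against the wall", False, False),
        AiEdit("Swap dirty patio for a pristine one", True, False),
        AiEdit("AI spokesperson delivering a testimonial", False, True),
    ]
    for edit in examples:
        print(edit.description, "|", classify(edit).value, "|", required_disclosures(edit))
```

A human reviewer still has to answer the two yes/no questions for each asset; the sketch only makes sure the same answers always lead to the same disclosure decision.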

By adhering to this rigorous standard, we ensure that our creative use of AI remains compliant with platform policies and, most importantly, maintains trust with the consumer.